Tech Giant Faces Ethical Scrutiny Over Military Applications

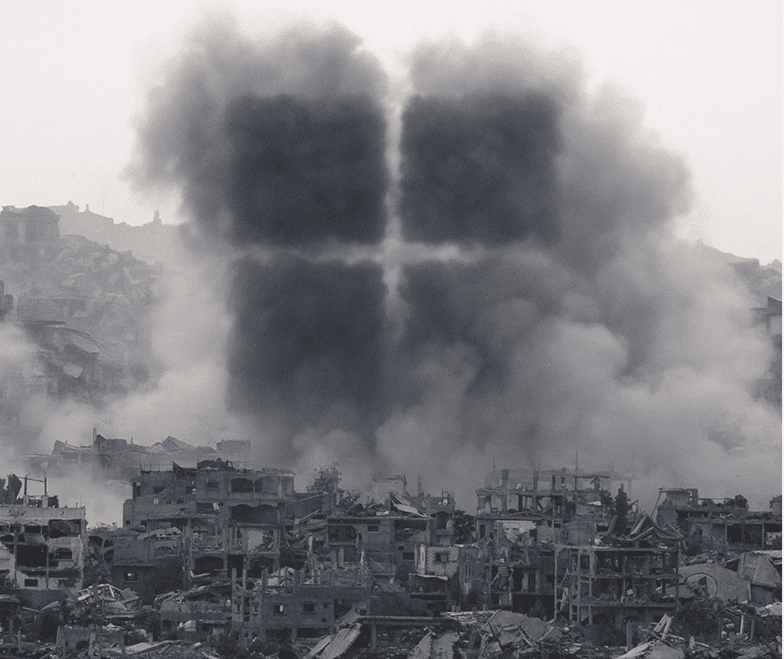

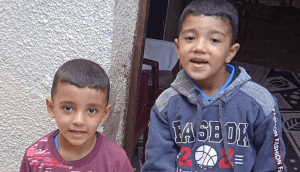

Microsoft is facing intense backlash after reports revealed its technology played a critical role in the recent Gaza conflict. Despite its public commitment to ethical AI, the company’s tools have reportedly been used by Israeli forces in controversial military operations. This revelation has sparked widespread criticism from human rights advocates, tech ethics experts, and even its own employees.

AI Tools in Modern Warfare

According to reports, Microsoft’s Azure cloud platform and OpenAI’s GPT-4 were deployed by elite Israeli intelligence units, including the highly secretive Unit 8200. Between June 2023 and April 2024, the Israeli military’s use of Microsoft’s cloud services reportedly rose by more than 20%, on top of a 155% increase in previous years. These tools reportedly supported everything from logistics management to real-time analysis of battlefield data, raising serious ethical questions for the tech giant.

Internal Dissent and Employee Backlash

The revelations have triggered internal unrest within Microsoft. Employees launched the “No Blue for Apartheid” campaign, protesting the use of company technology in conflict zones. Some staff members reportedly faced termination for their involvement, intensifying concerns about corporate responsibility. These internal protests underscore the growing tension between profit-driven innovation and ethical considerations.

Corporate Accountability and Future Challenges

In response to the backlash, Microsoft has publicly reaffirmed its commitment to human rights and responsible AI use. In a recent statement, the company emphasized that its technologies are “general-purpose tools” not designed for harm. However, critics argue that this stance rings hollow, given the company’s extensive defense contracts and long-standing support for Israel’s cybersecurity infrastructure.

As AI becomes an increasingly powerful force in global geopolitics, Microsoft faces mounting pressure to clarify its ethical boundaries. The controversy highlights the complex moral landscape that tech companies must navigate as their innovations are repurposed for military applications.

The Broader Impact on the Tech Industry

The Microsoft controversy comes at a time when the tech industry is grappling with broader ethical challenges. As AI systems become more capable, their potential for misuse grows, raising questions about accountability, transparency, and the responsibility of tech companies to ensure their products are not used to violate human rights.

Tech firms such as Microsoft, Google, and Amazon have become increasingly involved in defense projects, sparking debates over the militarization of AI. For Microsoft, this latest controversy may serve as a critical test of its corporate values and long-term reputation.

Calls for Greater Oversight

Human rights groups and tech ethics experts are calling for greater oversight and transparency in the deployment of AI technologies. They argue that companies like Microsoft must take proactive steps to ensure their tools are not used to fuel conflicts or suppress vulnerable populations.

The “No Blue for Apartheid” campaign has intensified these calls, with some employees demanding clearer ethical guidelines and stronger protections for whistleblowers.

Looking Ahead

As the debate over the role of AI in modern warfare continues, Microsoft faces a pivotal moment. The tech giant must now decide whether to double down on its defense contracts or take a stand for ethical technology use. With pressure mounting from employees, activists, and the public, the stakes are high for one of the world’s most influential technology companies.

Microsoft’s response to this crisis will likely shape not just its future, but also the broader conversation about the ethical responsibilities of tech giants in the 21st century.
